Top 10 most commonly used Machine Learning algorithms in Quantitative Finance PART 1

Machine Learning in Quantitative Finance

Note: This article is divided into two parts, with the second releasing next week. The ML algorithms are not ranked against each other; rather, they form a set of the most widely used ML methods in quantitative finance.

The importance of machine learning grows year over year, capturing the attention of the biggest banks and hedge funds as well as small proprietary trading firms that want to gain an edge over their competitors. It is therefore important to be at the very least aware of the different ML algorithms you are very likely to come across in quantitative finance.

Machine learning is a field of artificial intelligence that focuses on developing algorithms and models that can learn patterns from data and make predictions or decisions without being explicitly programmed. The goal of machine learning is to enable computers to learn and improve from experience, so they can make accurate predictions or decisions on new, unseen data. Because of this, it has found a wide range of applications in almost, if not all, areas of quantitative analysis, including risk management, asset pricing and forecasting the future returns of financial instruments.

In this article, as well as the following one next week, I list the most frequently used machine learning algorithms. Bear in mind that they are not ranked against each other in terms of occurrence, as that differs depending on factors like computational power, accessibility of data, size of datasets and type of company. Rather, the list aims to show the overall trend and the different uses that may prove helpful for various purposes across the financial field.

1. Regression Analysis:

Source here

To start off with the most basic, although still very powerful due to its universality and ease of use: regression analysis. This is a statistical technique used to estimate the relationship between a dependent variable and one or more independent variables. It allows us to assess and clarify how the dependent variable's value might change if one of the independent variables is varied.
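As a minimal sketch of the idea (not a production implementation), the snippet below fits a simple linear regression by ordinary least squares, regressing a stock's daily returns on the market's, and then asks the "what if the market moves" question. The data are synthetic, generated with an assumed true beta of 1.2, and the names `ols_fit`, `market` and `stock` are hypothetical.

```python
import random

def ols_fit(x, y):
    """Ordinary least squares for y = alpha + beta * x with one independent variable."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    beta = cov_xy / var_x            # slope: how much y moves per unit move in x
    alpha = mean_y - beta * mean_x   # intercept
    return alpha, beta

# Synthetic daily returns (illustrative only): the "stock" is generated with a true beta of 1.2
random.seed(42)
market = [random.gauss(0.0005, 0.01) for _ in range(500)]
stock = [0.0002 + 1.2 * m + random.gauss(0, 0.005) for m in market]

alpha, beta = ols_fit(market, stock)
print(f"alpha = {alpha:.5f}, beta = {beta:.3f}")
# The "what if" question: expected stock move if the market rises by 1%
print(f"expected stock move for a +1% market move: {alpha + beta * 0.01:.4%}")
```

The estimated beta lands close to the 1.2 used to generate the data; with real returns there is no known "true" value, so the estimate should be read together with its uncertainty.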

Advantages of using Regression Analysis:

Interpretability: Regression models are often easy to interpret, even by someone with little to no experience in data analysis, which can be helpful for understanding the relationship between variables and making decisions based on the results.

Flexibility: Regression analysis is a jack of all trades indeed: it can be used for a wide range of problems in quantitative finance, such as predicting asset returns, modeling credit risk, and estimating the impact of macroeconomic factors on financial markets.

Accuracy: When used appropriately, regression models can be very accurate at predicting financial outcomes.

Disadvantages of Regression Analysis:

Limited scope: Standard linear regression assumes a linear relationship between the dependent variable and the independent variables, which may not be appropriate for all financial problems. This can lead to inaccurate predictions and decisions.

Overfitting: Regression models can be prone to overfitting, which occurs when the model fits the training data too closely and fails to generalize to new data. This can lead to poor performance on new, unseen data.

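Assumptions: Linear regression assumes that the residuals (errors) are independent of each other and, for standard inference, normally distributed, and that the independent variables are not too strongly correlated with one another. These assumptions may not hold in all financial problems (return series, for example, often have fat tails), which can lead to biased or inaccurate results.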

Data quality: Regression models require high-quality data to make accurate predictions. If the data is incomplete or contains errors, the model may not be able to make accurate predictions.
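To make the "limited scope" point concrete, here is a small sketch with hypothetical numbers: a quantity that depends only on the squared price move (loosely similar to the gamma P&L of a delta-hedged option) is perfectly determined by the move, yet a straight-line fit finds essentially nothing. The helper names `ols_fit` and `r_squared` are illustrative.

```python
def ols_fit(x, y):
    """Ordinary least squares for y = alpha + beta * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    beta = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
            / sum((a - mean_x) ** 2 for a in x))
    return mean_y - beta * mean_x, beta

def r_squared(x, y, alpha, beta):
    """Share of the variance in y explained by the fitted line."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (alpha + beta * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot

# Hypothetical: a quantity that depends only on the squared price move
price_moves = [i / 100 for i in range(-10, 11)]   # -10% ... +10%
target = [0.5 * m ** 2 for m in price_moves]       # fully determined by the move, but not linearly

alpha, beta = ols_fit(price_moves, target)
print(f"beta = {beta:.4f}, R^2 = {r_squared(price_moves, target, alpha, beta):.4f}")
```

Because the relationship is symmetric, the fitted slope and R² both come out at essentially zero. This is exactly the kind of non-linear structure a linear regression misses.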

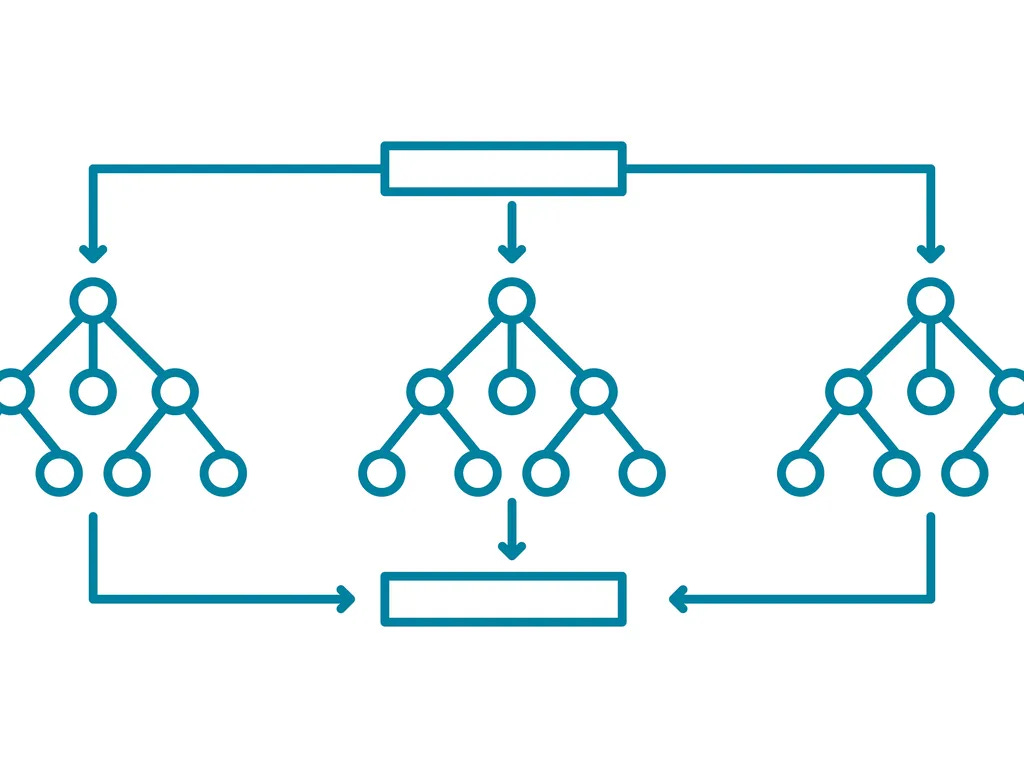

2. Random Forest:

Source here

A random forest is an ensemble learning method that uses multiple decision trees to make predictions. Its list of applications is very extensive: it can be used in tasks as diverse as object recognition, credit risk assessment or purchase recommendations based on prior customer behaviour, and, in the context of quantitative finance, in tasks such as portfolio optimization and risk management. In simple terms, "forest" is a very descriptive name, as the entire algorithm is built from many decision trees, each consisting of conditional (if/else) rules. Each tree is trained on a random sample of the data drawn with replacement, and the algorithm then aggregates the trees' outcomes in a process called ‘bagging’ (bootstrap aggregating) to get an accurate and stable prediction. When the outcome is a number, for example the expected return of a given stock, bagging averages the trees' results; when the outcome is a class label, it takes a majority vote.
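The bagging process can be sketched in plain Python. This is a deliberately simplified illustration under stated assumptions, not how production libraries implement random forests: each "tree" is a depth-1 stump (a single if/else rule), each is trained on a bootstrap sample, and each looks at one randomly chosen feature (real random forests draw a random feature subset at every split and grow much deeper trees). The features, data and function names are hypothetical.

```python
import random

def fit_stump(X, y, feature):
    """Depth-1 regression tree: a single 'if feature <= threshold' rule."""
    best = None
    values = sorted(set(row[feature] for row in X))
    for threshold in values[:-1]:  # the largest value would leave the right side empty
        left = [t for row, t in zip(X, y) if row[feature] <= threshold]
        right = [t for row, t in zip(X, y) if row[feature] > threshold]
        mean_l, mean_r = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - mean_l) ** 2 for t in left) + sum((t - mean_r) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, mean_l, mean_r)
    if best is None:  # every value identical: fall back to the overall mean
        mean_all = sum(y) / len(y)
        return lambda row: mean_all
    _, threshold, mean_l, mean_r = best
    return lambda row: mean_l if row[feature] <= threshold else mean_r

def fit_forest(X, y, n_trees=30, seed=0):
    rng = random.Random(seed)
    n, n_features = len(X), len(X[0])
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample, drawn with replacement
        feature = rng.randrange(n_features)         # simplified: one random feature per tree
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feature))
    return trees

def predict(trees, row):
    """Bagging for a numeric target: average the individual trees' predictions."""
    return sum(tree(row) for tree in trees) / len(trees)

# Synthetic data (illustrative only): features = [momentum, noise signal], target = next-day return
rng = random.Random(1)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(150)]
y = [(0.01 if momentum > 0 else -0.01) + rng.gauss(0, 0.002) for momentum, _ in X]

forest = fit_forest(X, y)
print(f"expected return, strong momentum: {predict(forest, [0.5, 0.0]):+.4f}")
print(f"expected return, weak momentum:   {predict(forest, [-0.5, 0.0]):+.4f}")
```

Only the momentum feature carries signal in this synthetic data, so the averaged forecast is positive for strong momentum and negative for weak momentum. The stumps that happened to draw the noise feature forecast values close to zero, which dampens the average rather than flipping it.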

Advantages of Random Forest method:

Accuracy: Random forest models can provide highly accurate predictions and have been shown to outperform other machine learning models in some financial applications.

Robustness: In contrast to regression analysis, random forest models are less prone to overfitting than many other machine learning models, which can be important in finance where generalizability is key.

Non-linear modelling: Another argument in favour of RF over regression analysis is that random forest models can capture non-linear relationships between variables, making them more flexible than linear regression models.

Feature importance: Random forest models can provide insights into which variables are most important for making predictions, which can be useful for understanding the underlying drivers of financial outcomes. Combined with proper financial market expertise, RF can therefore be really powerful.

Disadvantages of Random Forest method:

Interpretability: Random forest models can be difficult to interpret, making it challenging to understand how the model is making predictions.

Computationally expensive: Random forest models can be computationally expensive to train and can require significant computing resources. This is especially important when dealing with ever-growing datasets or when speed of computation is decisive, as in high-frequency trading.

Sensitivity to hyperparameters: Random forest models have hyperparameters that need to be tuned for optimal performance, which can be time-consuming and require domain expertise.

Bias: Random forest models can be biased if the data used to train the model is biased or if certain variables are overrepresented in the training data. This can lead to inaccurate predictions and decisions.

3. Support Vector Machines (SVM):

Source here

SVM is a supervised learning algorithm used for classification and regression analysis. Supervised learning requires the outcomes in the training data to be labelled first, so that the algorithm can label new data based on the patterns it has learnt. SVM learns a separating boundary (a line or, in more dimensions, a hyperplane) that leaves the widest possible margin between the classes, and then labels new data points according to which side of that boundary their features fall on.
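A minimal sketch of the supervised, linear case, assuming synthetic labelled data: the classifier learns a separating line w·x + b = 0 from labelled examples and then labels any point by which side of the line it falls on. It is fitted here by stochastic sub-gradient descent on the soft-margin hinge loss, which is one of several ways to train a linear SVM (libraries typically use dedicated solvers). The features, labels and hyperparameter values (`lam`, `lr`, `epochs`) are illustrative assumptions.

```python
import random

def train_linear_svm(X, y, lam=0.01, lr=0.01, epochs=200, seed=0):
    """Soft-margin linear SVM fitted by stochastic sub-gradient descent on the hinge loss.
    Labels must be +1 / -1."""
    rng = random.Random(seed)
    w, b = [0.0] * len(X[0]), 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            if margin < 1:
                # inside the margin or misclassified: move the boundary towards classifying it correctly
                w = [wj - lr * (lam * wj - y[i] * xj) for wj, xj in zip(w, X[i])]
                b += lr * y[i]
            else:
                # safely classified: only the regularisation term acts, shrinking w (margin width = 2 / ||w||)
                w = [wj - lr * lam * wj for wj in w]
    return w, b

def classify(w, b, x):
    """Label a new point by which side of the separating line w.x + b = 0 it falls on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Synthetic, labelled training data (illustrative only): two scaled features per borrower,
# label +1 ("risky") when feature 0 exceeds feature 1, otherwise -1 ("safe").
rng = random.Random(7)
X, y = [], []
while len(X) < 100:
    x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
    if abs(x[0] - x[1]) > 0.2:  # keep a gap so the classes are linearly separable
        X.append(x)
        y.append(1 if x[0] > x[1] else -1)

w, b = train_linear_svm(X, y)
accuracy = sum(classify(w, b, x) == t for x, t in zip(X, y)) / len(X)
print(f"w = [{w[0]:.2f}, {w[1]:.2f}], b = {b:.3f}, training accuracy = {accuracy:.0%}")
```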

However, SVM does not have to be only a linear classifier. A technique called the kernel trick lets SVM work with much greater flexibility by introducing non-linear decision boundaries. The kernel trick allows SVM algorithms to classify data points in a way that is not limited by the dimensionality of the original feature space. Instead of explicitly transforming the data into a higher-dimensional space, the kernel trick uses a function that computes the dot product between pairs of data points in that space, without ever calculating the coordinates of the data points in that space.
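The kernel trick can be checked numerically. For the degree-2 polynomial kernel k(x, z) = (x·z)², the kernel value computed in the original 2-D space equals the dot product of the two points after mapping them into a 3-D space with φ(x) = (x1², √2·x1·x2, x2²). Because that mapping contains x1² and x2², a circle-shaped boundary in the original space becomes a flat plane in the mapped space. The example points are arbitrary.

```python
import math

def poly_kernel(x, z):
    """Degree-2 polynomial kernel k(x, z) = (x . z)^2, computed in the original 2-D space."""
    return sum(a * b for a, b in zip(x, z)) ** 2

def phi(x):
    """The explicit 3-D feature map this kernel corresponds to (built here only for comparison)."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]

x, z = [1, 2], [3, 4]
via_kernel = poly_kernel(x, z)                            # no 3-D coordinates are ever computed
via_mapping = sum(a * b for a, b in zip(phi(x), phi(z)))  # map both points, then take the dot product
print(via_kernel, via_mapping)
```

Both values equal 121 (the mapped version up to floating-point rounding), but the kernel never built the 3-D vectors. That saving is what makes kernels such as the Gaussian (RBF) kernel, whose implicit feature space is infinite-dimensional, usable in practice.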

Advantages of Support Vector Machines (SVM):

Non-linear modelling: SVM can capture non-linear relationships between variables, making them more flexible than linear models like linear regression.

High accuracy: SVM can provide highly accurate predictions and have been shown to outperform other machine learning models in some financial applications.

Robustness: SVM is less prone to overfitting than other machine learning models, which can be important in finance where generalizability is key.

Ability to handle high-dimensional data: SVM works well with high-dimensional data, and the kernel trick lets it operate in a higher-dimensional space without explicitly transforming the data, which would be computationally expensive.

Disadvantages of Support Vector Machines (SVM). The kernel trick helps mitigate most of SVM's limitations, but the following issues still remain:

Computationally expensive: SVM can be computationally expensive to train and can require significant computing resources, especially for large datasets.

Sensitivity to hyperparameters: SVM has hyperparameters that need to be tuned for optimal performance, which can be time-consuming and require domain expertise.

Interpretability: SVM can be difficult to interpret, making it challenging to understand how the model is making predictions.

Limited applicability to multi-class problems: SVM is primarily designed for binary classification problems. Multi-class problems have to be broken down into several binary ones (for example one-vs-rest or one-vs-one), which can be more challenging and less accurate than using other machine learning models.

4. Neural Networks:

Source here

Neural networks are a class of machine learning algorithms inspired by the structure and function of the human brain. Biological neurons emit an electrical pulse when a certain kind of chemical substance reaches a given level. Translated into mathematical terms, an artificial neuron weights and sums its inputs (our chemical substances) and then compares the sum against a threshold. If the weighted sum exceeds the threshold, the case is labelled differently from cases that fall below it. A neural network connects many such neurons in layers, so that the outputs of one layer become the inputs of the next.
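The weigh-sum-and-compare description above is the classic perceptron. A minimal sketch, assuming two hypothetical binary signals as inputs, shows a single neuron and the perceptron learning rule, which nudges the weights and the threshold after every mistake until the neuron reproduces the labels. Real networks stack many neurons and use smooth activation functions so they can be trained with backpropagation; this snippet only illustrates one neuron.

```python
def neuron(inputs, weights, threshold):
    """Weight and sum the inputs, then compare the sum with a threshold (step activation)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: after each mistake, shift weights and threshold towards the right answer."""
    weights = [0.0] * len(samples[0])
    threshold = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - neuron(x, weights, threshold)  # +1, 0 or -1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            threshold -= lr * error  # a lower threshold makes the neuron fire more easily
    return weights, threshold

# Hypothetical binary signals: (momentum positive?, sentiment positive?)
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]  # "buy" (1) only when both signals agree
weights, threshold = train_perceptron(samples, labels)
print(weights, threshold)
print([neuron(x, weights, threshold) for x in samples])  # → [0, 0, 0, 1]
```

A single neuron can only draw one straight boundary between cases, which is why non-linear problems need several layers of neurons.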

Advantages of using Neural Networks for Quantitative Analysis:

Non-linear modelling: Neural networks can capture non-linear relationships between variables, making them more flexible than linear models like linear regression.

High accuracy: Neural networks can provide highly accurate predictions and have been shown to outperform other machine learning models in some financial applications.

Robustness: With enough data and proper regularisation, NN can generalise well, which can be important in finance where generalizability is key.

Ability to handle large and complex data: Neural Networks can handle large and complex datasets, including unstructured data such as text or images.

Disadvantages of using Neural Networks for Quantitative Analysis:

Computationally expensive: Like the previous models, neural networks can be computationally expensive to train and can require significant computing resources, especially for large and complex datasets.

Sensitivity to hyperparameters: NN is no exception when it comes to hyperparameters: they need to be tuned for optimal performance, which can be time-consuming and require domain expertise.

Interpretability: NN can be difficult to interpret, making it challenging to understand how the model is making predictions.

Data quality and bias: Neural networks can be sensitive to biases in the data, and the use of large and complex datasets can increase the risk of data quality issues that can impact the accuracy of the model.

Overfitting: Lastly, this algorithm can be prone to overfitting if the model is too complex or if the dataset is too small, which can lead to poor generalizability and inaccurate predictions on new data.

5. Bayesian Networks:

Source here

Bayesian networks are the first model on this list built directly on probability theory: their core is a set of probabilistic relationships among variables. They estimate the probability of an outcome based on how often similar features and indicators occurred together with that outcome in the training data. The Naïve Bayes model is a simple example of a Bayesian network: at its core, it computes the probability of an outcome from how often the same circumstances led to that outcome in the training dataset, assuming the features are independent of each other given the outcome. Bayesian networks are often used in quantitative finance for tasks such as credit risk modelling, fraud detection and sentiment analysis, for example assessing investor sentiment based on comments (stay tuned: later on this blog we will implement this one to examine investor sentiment based on Twitter comments).
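Since the text mentions sentiment analysis, here is a minimal multinomial Naïve Bayes sketch on a handful of made-up comments (the comments, labels and function names are hypothetical; a real sentiment model would need far more data and proper text preprocessing). It combines the class prior with per-word probabilities, working in logs to avoid numerical underflow, and uses add-one (Laplace) smoothing so that a word never seen with a class does not zero out that class's probability.

```python
import math
from collections import Counter

def train_naive_bayes(docs, labels):
    """Multinomial Naive Bayes: class priors plus word counts per class."""
    priors = {c: labels.count(c) / len(labels) for c in set(labels)}
    word_counts = {c: Counter() for c in priors}
    for doc, c in zip(docs, labels):
        word_counts[c].update(doc.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return priors, word_counts, vocab

def classify(doc, priors, word_counts, vocab):
    """Pick the class with the highest log P(class) + sum of log P(word | class)."""
    scores = {}
    for c, prior in priors.items():
        total = sum(word_counts[c].values())
        score = math.log(prior)
        for w in doc.lower().split():
            if w in vocab:  # words never seen in training are ignored
                # "naive" step: each word contributes independently given the class;
                # +1 (Laplace smoothing) keeps unseen word/class pairs from zeroing the product
                score += math.log((word_counts[c][w] + 1) / (total + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

# Hypothetical, hand-labelled investor comments (illustrative only)
docs = [
    "great earnings beat strong buy",
    "strong growth great guidance",
    "bullish on this stock buy",
    "terrible earnings miss sell",
    "weak guidance sell now",
    "bearish outlook weak demand",
]
labels = ["positive", "positive", "positive", "negative", "negative", "negative"]

model = train_naive_bayes(docs, labels)
print(classify("strong earnings buy", *model))  # → positive
print(classify("weak outlook sell", *model))    # → negative
```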

Advantages of using Bayesian networks:

Probabilistic modelling: Bayesian networks are probabilistic models that can capture uncertainty and variability in the data, making them well-suited for financial applications such as forecasting, where uncertainty is inherent.

Transparency: Bayesian networks can also provide transparency into the relationships between variables, allowing users to understand how the model is making predictions.

Interpretability: Bayesian networks can be relatively easy for a user to interpret, making them well-suited for applications where understanding the underlying factors driving the model's predictions is important.

Flexible modelling: Bayesian networks are flexible in their modelling approach, allowing for the incorporation of domain-specific knowledge and the use of prior probabilities to inform the model.
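A Bayesian network with more structure than Naïve Bayes can be sketched as a chain Recession → Default → Missed payment. The prior P(recession) and the conditional probability tables below are made-up numbers for illustration, and inference is done by brute-force enumeration over every combination of states, which is only feasible for tiny networks.

```python
from itertools import product

# Hypothetical prior and conditional probability tables (illustrative numbers only)
P_R = {1: 0.2, 0: 0.8}             # prior: recession (1) or not (0)
P_D_given_R = {1: 0.10, 0: 0.02}   # P(default = 1 | recession state)
P_M_given_D = {1: 0.90, 0: 0.05}   # P(missed payment = 1 | default state)

def bernoulli(p_one, value):
    return p_one if value == 1 else 1 - p_one

def joint(r, d, m):
    """Chain rule along the network Recession -> Default -> MissedPayment."""
    return P_R[r] * bernoulli(P_D_given_R[r], d) * bernoulli(P_M_given_D[d], m)

def query(target, evidence):
    """P(target = 1 | evidence) by enumerating all 2^3 joint states."""
    num = den = 0.0
    for r, d, m in product((0, 1), repeat=3):
        state = {"R": r, "D": d, "M": m}
        if any(state[k] != v for k, v in evidence.items()):
            continue  # inconsistent with what we observed
        p = joint(r, d, m)
        den += p
        if state[target] == 1:
            num += p
    return num / den

print(f"P(default | missed payment)   = {query('D', {'M': 1}):.3f}")
print(f"P(recession | missed payment) = {query('R', {'M': 1}):.3f}")
```

With these numbers, observing a missed payment raises the probability of default from its unconditional 3.6% to about 40%, and the probability of a recession from the 20% prior to about 33.5%. Changing the prior `P_R` changes both posteriors, which shows why the choice of priors matters.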

Disadvantages of using Bayesian networks:

Limited scalability: Here we go again. Bayesian networks can be computationally expensive for large datasets, and their performance can be impacted by the size of the dataset.

Data quality and bias: Bayesian networks can be sensitive to biases in the data, and the use of large and complex datasets can increase the risk of data quality issues that can impact the accuracy of the model.

Complexity: I mentioned among the advantages that Bayesian networks are easy to interpret; however, they can be complex to construct and require domain expertise to build and interpret effectively.

Limited applicability to non-probabilistic problems: Bayesian networks are primarily designed for probabilistic modelling problems, and their use in non-probabilistic modelling problems may be less accurate or appropriate than other machine learning models.

Prior specification: Finally, Bayesian networks require the specification of prior probabilities, which can be challenging to determine in some applications and may introduce additional sources of uncertainty into the model.

That concludes the first part of the top 10 most commonly used ML models in quantitative finance. As you can see, ML applications vary widely, and which model fits best depends on the problem at hand and the means available. With this post I attempted to give you a brief overview and comparison of the most common ones, which you should at the very least be aware of, as ML's influence on the finance industry will undoubtedly keep rising. So rise with it towards your goals and let technology enhance your work, so you can take full advantage of the wonderful tools that lie within reach.

Stay tuned for the second part coming up next week!

Subscribe so you don't miss out!

Thanks,

Tomasz